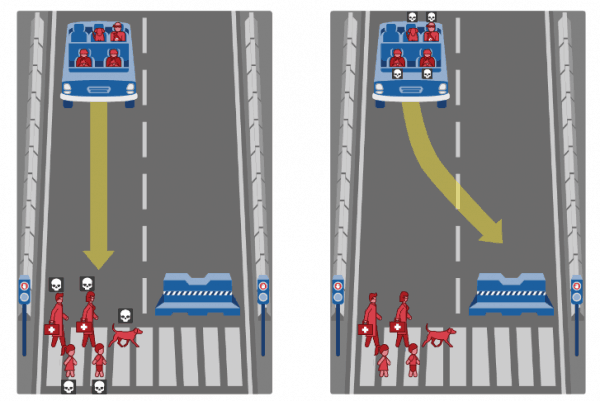

The Trolley Problem is a famous ethical thought experiment. Here is how it goes: a trolley is barreling down the railroad tracks. Ahead, on the tracks, there are five people tied up and unable to move. The trolley is headed straight for them. You are standing some distance off in the train yard, next to a lever. If you pull this lever, the trolley will switch to a different set of tracks. However, you notice that there is one person on the side track. You have two options:

- Do nothing, and the trolley kills the five people on the main track.

- Pull the lever, diverting the trolley onto the side track where it will kill one person.

Which is the more ethical choice?

First formulated in the mid-20th century, it has recently regained attention thanks to autonomous cars. Without a purely mathematical decision process, the answer is left to ethics: what is right and what is wrong, according to both personal ethics and the ethics of society.

Beyond resolving this one driving problem, it draws attention to artificial intelligence in general. Can we just let artificial intelligence do whatever it wants without some code of ethics? And if we do infuse it with a code of ethics, which one will it be: the Judeo-Christian one or the Nazi one?

In a highly controversial paper published in 2016, two Jiao Tong University researchers say they used “facial images of 1,856 real persons” to recognize “some discriminating structural features for predicting criminality…”. Their conclusion was that “all four classifiers perform consistently well and produce evidence for the validity of automated face-induced inference on criminality, despite the historical controversy surrounding the topic.” In other words, using facial recognition, one could predict the probability of a person committing a crime.

Science without conscience

Beyond the veracity of the study results, the question that should be asked first is: what are the consequences of such research? While this study is just a research paper not intended for practical implementation, it kicks open the door to physiognomy, a pseudo-science often used and abused by racists and supremacists over much of the course of human civilization. Is it a proper course of action to unleash artificial intelligence to support such an unscientific conclusion? What if some government decides to implement it and arrest citizens based solely on their facial features, even though they have never committed a crime and probably never will?

Another recent study, this one from Stanford University, reported that, using deep learning facial recognition, a computer could recognize whether a man was gay 81% of the time. Using 35,000 images posted on dating websites, Michal Kosinski and Yilun Wang extracted facial features particular to gay men and women. After comparing the results with human judgment, which proved to be less accurate, they concluded that “faces contain much more information about sexual orientation than can be perceived and interpreted by the human brain”. Here again, the researchers apparently never questioned the consequences of their publication.

In both cases, there are procedural flaws. The datasets used are very small: a few thousand images are not enough to support a generalization. In addition, one study used only Chinese samples, while the other used only white people on dating sites. Those are very limited samples.

There are also dangerous conclusions. If the algorithms are right 80% of the time, they are also wrong 20% of the time. This is a very high error rate, especially when deciding whether someone is a criminal or not. Would we be comfortable with a justice system that is wrong 20% of the time?
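To put rough numbers on that error rate, here is a minimal sketch of the arithmetic. The population size and the 1% share of actual offenders are made-up illustrative figures, not values from either study, and treating "right 80% of the time" as both the hit rate and the correct-rejection rate is a simplifying assumption. The function name `screening_outcomes` is hypothetical.

```python
def screening_outcomes(population, prevalence, sensitivity, specificity):
    """Count who gets flagged when a classifier screens a whole population.

    prevalence  : share of people who truly belong to the target group (assumed)
    sensitivity : share of true members the classifier flags
    specificity : share of non-members the classifier correctly leaves alone
    """
    actual_positives = population * prevalence
    actual_negatives = population - actual_positives

    true_positives = actual_positives * sensitivity
    false_positives = actual_negatives * (1 - specificity)

    # Of everyone flagged, what share really belongs to the target group?
    precision = true_positives / (true_positives + false_positives)
    return true_positives, false_positives, precision


# Illustrative assumptions only: 100,000 people, 1% actual offenders,
# and the article's "80% right" used for both rates.
tp, fp, precision = screening_outcomes(100_000, 0.01, 0.80, 0.80)
print(f"correctly flagged: {round(tp)}")
print(f"wrongly flagged:   {round(fp)}")
print(f"share of flagged people who are actual offenders: {precision:.1%}")
```

Under these assumed figures, about 800 people are flagged correctly and about 19,800 are flagged wrongly, so fewer than 4% of the people the system points at would actually belong to the group it claims to detect. The rarer the trait, the worse this ratio gets.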

Deep learning looks for similarity in patterns

When learning to understand new objects, deep learning works by identifying recurring patterns. Throw thousands of images at it and it will identify what is common between all of them, even if there is no real, scientifically proven recurring pattern. For example, we could train an image recognition categorizer to recognize an object called a “Brooka”. Find 1,000 or more random images of anything and run them through a deep learning engine. Whatever common pattern it extracts from these images will then be recognized as a “Brooka”, although no such object exists. If instructed, that is what deep learning does: it assumes that there are patterns and will find them, whether or not they exist. In both pieces of research above, the same approach is applied.
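The effect can be reproduced on a small scale. The sketch below is not deep learning: it uses a 1-nearest-neighbour classifier as a simple stand-in, and the data are random numbers with randomly assigned labels, so there is nothing real to learn. All names (`random_dataset`, `nearest_label`, `accuracy`) and sizes are hypothetical choices made for illustration.

```python
import random


def random_dataset(rng, n, dims):
    """Generate n random points with random 0/1 labels (no real pattern)."""
    points = [[rng.random() for _ in range(dims)] for _ in range(n)]
    labels = [rng.randint(0, 1) for _ in range(n)]
    return points, labels


def nearest_label(point, train_points, train_labels):
    """Return the label of the training point closest to `point` (1-NN)."""
    best_index = min(
        range(len(train_points)),
        key=lambda i: sum((a - b) ** 2 for a, b in zip(point, train_points[i])),
    )
    return train_labels[best_index]


def accuracy(points, labels, train_points, train_labels):
    """Share of points whose predicted label matches their assigned label."""
    hits = sum(
        nearest_label(p, train_points, train_labels) == y
        for p, y in zip(points, labels)
    )
    return hits / len(points)


rng = random.Random(0)
train_x, train_y = random_dataset(rng, 200, 16)
test_x, test_y = random_dataset(rng, 200, 16)

train_acc = accuracy(train_x, train_y, train_x, train_y)
test_acc = accuracy(test_x, test_y, train_x, train_y)
print(f"accuracy on the data it was trained on: {train_acc:.2f}")
print(f"accuracy on fresh random data:          {test_acc:.2f}")
```

The model scores perfectly on the data it was trained on, because it has simply memorised meaningless labels, while on fresh data it does about as well as a coin flip. A result that looks impressive on the training set says nothing about whether a real pattern exists.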

Consider the consequences

Because both are scientific papers, they do not consider the ethical implications of their research. Nor do they consider whether the research itself is appropriate or practical. To be applied in real life, each would need to be 100% accurate. Otherwise, 20% of the population would find themselves misclassified, sometimes with deadly implications.

Already, companies offer commercial applications derived from this kind of research. Faception, for example, claims to have categorizers that can recognize people with high IQ, terrorists, or child molesters based solely on the shape of their faces.

Artificial Intelligence without ethics is extremely dangerous. The same way we teach our children what is right or wrong, we should teach our A.I. to make moral judgments. Just because something can be done does not mean it should be done. Most of us do not spend our days killing everyone we see, even though it is something we could easily do. The reason? We function according to a strong code of ethics (as does our society) that forbids us from doing it.

If we want A.I. to be truly intelligent and useful, we should require, as a prerequisite, that it follow the same ethical rules that have guided humanity. Otherwise, it will destroy what has been at the core of human progress. And because image recognition is one of the most visible cases of A.I. implementation, it should lead by example.

Photo by Aditya Virendra Doshi

Author: Paul Melcher

Paul Melcher is a highly influential and visionary leader in visual tech, with 20+ years of experience in licensing, tech innovation, and entrepreneurship. He is the Managing Director of MelcherSystem and has held executive roles at Corbis, Stipple, and more. Melcher received a Digital Media Licensing Association Award, is a board member of Plus Coalition, Clippn, and Anthology, and has been named among the “100 most influential individuals in American photography”.
