As the EU AI Act’s obligations for general-purpose AI models take effect, the age of opaque training data and untraceable outputs is drawing to a close.

The Shifting Sands of AI Regulation

The era of unregulated AI development is coming to an end, at least in Europe. As generative AI capabilities advance at breakneck speed, the European Union has drawn a clear line: if your AI model learns from or generates content, you’re now accountable, not just ethically, but legally.

Contrary to popular belief, the EU AI Act does not ban the use of copyrighted content in AI training. Instead, it imposes transparency and responsibility, aligning with pre-existing copyright law. With the August 2, 2025, deadline approaching, providers of general-purpose AI (GPAI) models must meet new requirements or face steep penalties. This marks a global precedent: the first binding legal framework specifically regulating how AI models handle training data, outputs, and disclosure.

Deconstructing the Myth: “You Can’t Train on Copyrighted Data.”

At the heart of this debate lies a misunderstanding of EU copyright law. The truth is more nuanced, and probably more important.

The EU’s Directive on Copyright in the Digital Single Market (DSM Directive) introduced two key exceptions for Text and Data Mining (TDM), meaning the automated analysis of content, including for training AI models:

Article 3: Permits TDM for scientific research by research organisations and cultural heritage institutions. Rights holders cannot opt out of this exception, but it is of limited use for commercial AI.

Article 4: Crucially, allows TDM for any purpose, including commercial, unless the rights holder has “expressly reserved their rights in an appropriate manner.” What counts as “appropriate”? Metadata, robots.txt files, or API terms that are machine-readable and clear.

In addition, AI developers may only apply TDM to content they have lawful access to, meaning publicly available content is fair game only if rights are not expressly reserved. Paywalled, restricted, or scraped content without a license remains out of bounds.

This is not a ban. It is a framework designed to protect rights while enabling innovation.
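As a rough illustration of what honoring a machine-readable reservation could look like, here is a minimal sketch that checks a robots.txt file before a page is mined. It uses Python's standard `urllib.robotparser`. The crawler name `ExampleAIBot`, the helper `may_mine`, and the sample file are all hypothetical, and whether robots.txt alone counts as an “appropriate” reservation under Article 4 is a legal question this sketch does not settle.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical crawler name, used for illustration only.
CRAWLER_USER_AGENT = "ExampleAIBot"


def may_mine(robots_txt: str, url: str, user_agent: str = CRAWLER_USER_AGENT) -> bool:
    """Return True if the robots.txt text does not disallow `user_agent` for `url`."""
    parser = RobotFileParser()
    # parse() accepts the file's lines directly, so no network access is needed.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Sample file: the site reserves its content against the hypothetical AI crawler
# while leaving it open to everyone else.
SAMPLE_ROBOTS_TXT = """\
User-agent: ExampleAIBot
Disallow: /

User-agent: *
Allow: /
"""

if __name__ == "__main__":
    url = "https://example.com/photos/1.jpg"
    print(may_mine(SAMPLE_ROBOTS_TXT, url))                  # False
    print(may_mine(SAMPLE_ROBOTS_TXT, url, "SomeOtherBot"))  # True
```

A real pipeline would likely also need to check other signals the article mentions, such as embedded metadata and API terms, and to record the result alongside each item it collects.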

Article 53 of the EU AI Act: Obligations for GPAI Providers

The real game-changer is Article 53 of the EU AI Act. It directly targets developers of General-Purpose AI (GPAI) models: large foundational systems like those from OpenAI, Midjourney, Stability AI, and Google DeepMind.

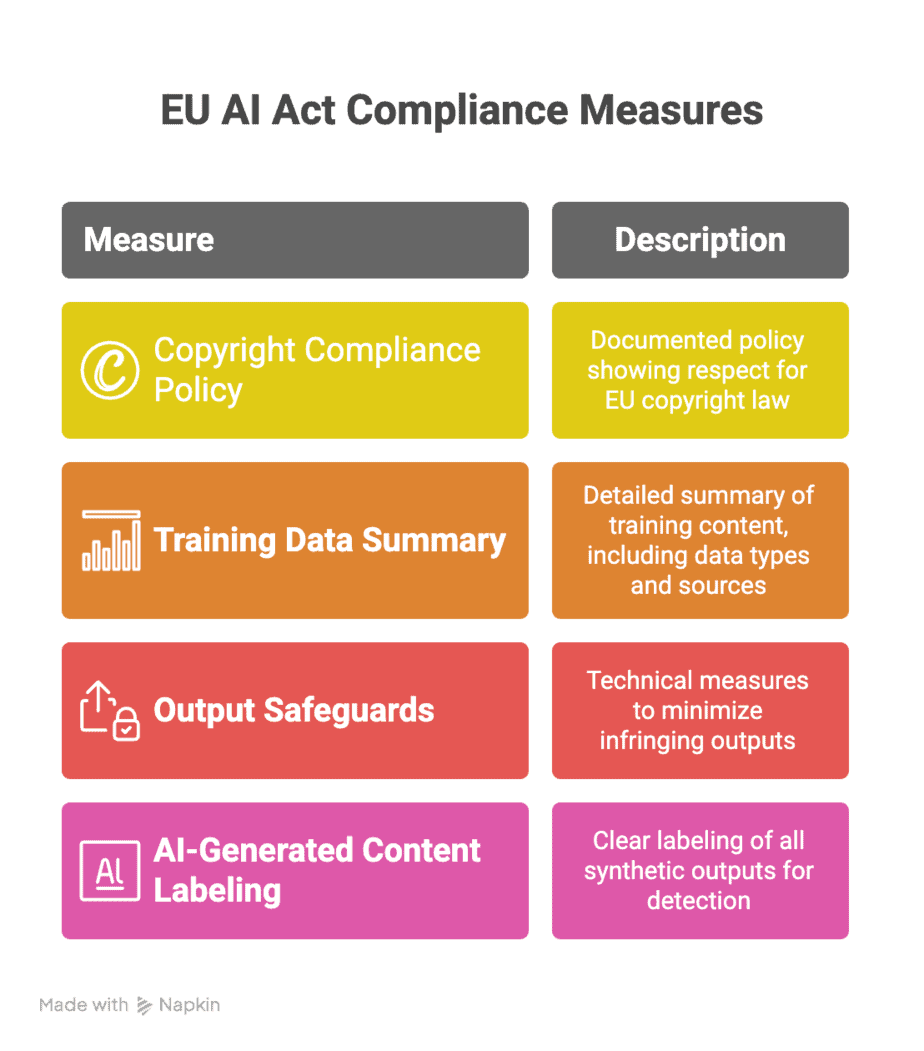

By August 2, 2025, GPAI providers must meet the following obligations:

Copyright Compliance Policy: A documented policy that demonstrates how the provider respects EU copyright law, particularly the opt-out rights outlined in Article 4 of the DSM Directive.

Training Data Summary: A “sufficiently detailed” summary of the content used to train the model. This must disclose the types and sources of data, especially whether copyrighted content is involved. The goal is transparency, not trade secret exposure.

Output Safeguards: Technical measures to reduce the risk of infringing outputs. These might include filters to detect and block replication of copyrighted material or tools to identify generated content resembling known works.

AI-Generated Content Labeling: All synthetic outputs (images, audio, video, text) must be clearly labeled and machine-detectable. This applies not just to deepfakes but to all generative content circulated in the EU.

Together, these measures aim to reinforce trust, prevent infringement, and give rights holders insight into how their work might be used.
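To make the training data summary more concrete, the sketch below aggregates per-source provenance records into totals by content type and by legal basis. The record fields, the basis labels, and the output shape are assumptions made for illustration; this is not the official summary template, only one way a provider might roll up the “types and sources” of its data without listing individual works.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class SourceRecord:
    """One provenance entry. All field names are hypothetical."""
    source: str        # e.g. a domain or dataset name
    content_type: str  # e.g. "text", "image"
    basis: str         # e.g. "licensed", "public_domain", "tdm_no_opt_out"
    items: int         # number of items drawn from this source


def summarize(records: list[SourceRecord]) -> dict:
    """Aggregate item counts by content type and by legal basis."""
    by_type: Counter = Counter()
    by_basis: Counter = Counter()
    for record in records:
        by_type[record.content_type] += record.items
        by_basis[record.basis] += record.items
    return {
        "total_items": sum(record.items for record in records),
        "by_content_type": dict(by_type),
        "by_basis": dict(by_basis),
        "sources": sorted({record.source for record in records}),
    }


if __name__ == "__main__":
    records = [
        SourceRecord("example-news.com", "text", "licensed", 1200),
        SourceRecord("example-photos.org", "image", "tdm_no_opt_out", 300),
        SourceRecord("example-archive.net", "text", "public_domain", 500),
    ]
    print(summarize(records))
```

Keeping the summary at the level of sources and categories reflects the stated goal of transparency without trade secret exposure.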

The August 2, 2025 Deadline: A Global Watershed

This is more than a bureaucratic milestone. The EU AI Act introduces extraterritorial enforcement: any AI model made available in the EU, regardless of where it was developed, must comply. This means companies headquartered in the U.S., China, or anywhere else are on notice.

It also introduces downstream liability: if your company integrates or distributes a non-compliant GPAI model to users in the EU, you too can be held liable and face penalties under the AI Act.

While fines for some violations may only be triggered later, the core obligations for transparency and copyright compliance kick in on August 2, 2025, although the EU has granted grace periods in the past. Failing to comply could result in penalties of up to €15 million or 3% of global turnover, whichever is higher.
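The “whichever is higher” rule is simple arithmetic, sketched below. The function name and the use of whole euros are assumptions for illustration, and the result is only the ceiling described above, not a prediction of any actual fine.

```python
FIXED_CAP_EUR = 15_000_000  # the €15 million figure cited above
TURNOVER_PERCENT = 3        # the 3% of global turnover cited above


def max_fine_eur(global_turnover_eur: int) -> int:
    """Return the upper bound on the penalty: the higher of the two caps."""
    # Integer arithmetic avoids floating-point rounding on large amounts.
    turnover_cap = global_turnover_eur * TURNOVER_PERCENT // 100
    return max(FIXED_CAP_EUR, turnover_cap)


if __name__ == "__main__":
    print(max_fine_eur(100_000_000))    # 15000000 (3% is only 3 million)
    print(max_fine_eur(2_000_000_000))  # 60000000 (3% exceeds the fixed cap)
```

Under these figures the two caps meet at €500 million in turnover, so for any larger company the percentage is the binding number.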

Beyond the fines, reputational risk looms large. With governments, publishers, and creators watching closely, non-compliance could compromise market access, partnership opportunities, and public trust.

Strategic Shifts for Developers and Creators

For AI Developers:

Internal Policies: Legal teams must now rigorously evaluate whether and how content used in training respects TDM opt-outs.

Tech Investment: Tools to detect opt-out signals, track dataset provenance, and filter problematic outputs will be essential.

Transparency as a Differentiator: Openness about training practices will evolve from PR strategy to business necessity.

Licensing Models: Expect a surge in data licensing agreements and curated datasets from rights-respecting providers.
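As one example of the tooling involved, here is a deliberately simple sketch of an output filter that flags text closely reproducing a known work. It compares word 5-grams and blocks the output above a threshold. The function names, the threshold of 0.5, and the choice of n-gram overlap are all assumptions; production systems would likely need fuzzier matching and an index rather than a linear scan.

```python
def word_ngrams(text: str, n: int) -> set:
    """Return the set of lowercase word n-grams in `text`."""
    words = text.lower().split()
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def overlap_ratio(output: str, protected: str, n: int = 5) -> float:
    """Fraction of the output's n-grams that also appear in the protected work."""
    output_grams = word_ngrams(output, n)
    if not output_grams:
        return 0.0  # output shorter than n words: nothing to compare
    return len(output_grams & word_ngrams(protected, n)) / len(output_grams)


def should_block(output: str, protected_works: list, threshold: float = 0.5, n: int = 5) -> bool:
    """Return True if the output overlaps any protected work at or above the threshold."""
    return any(overlap_ratio(output, work, n) >= threshold for work in protected_works)


if __name__ == "__main__":
    protected = ["the quick brown fox jumps over the lazy dog near the river bank"]
    print(should_block("The quick brown fox jumps over the lazy dog near the river bank", protected))  # True
    print(should_block("a new sentence about data rules in europe written from scratch", protected))   # False
```

Exact n-gram matching only catches near-verbatim replication of text; images, audio, and paraphrase would each need a different similarity measure.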

For Content Creators and Rights Holders:

Increased Leverage: By embedding opt-out signals, creators can legally shield their work from unauthorized AI training. The IPTC recently published a practical and very useful guide on how to proceed: Generative AI Opt-Out Best Practices.

Monitoring & Enforcement: New compliance disclosures make it easier to identify misuse and take action.

Revenue Opportunities: With demand for “clean” datasets growing, licensing content for training could become a meaningful income stream for some.

A New Era of Responsible AI

The EU AI Act doesn’t prohibit innovation; it steers it. By demanding transparency, it invites accountability. By aligning AI training practices with existing copyright norms, it empowers creators to assert control over how their works are used.

Because the framework is based on an opt-out mechanism, the burden of enforcement still rests heavily on rights holders. Yet for the first time, there is a structured pathway to regain agency, and to participate meaningfully in the AI economy.

By codifying the labeling of synthetic content, the Act also reinforces public trust at a time when authenticity is under siege.

The EU has drawn the first clear line in the sand between lawful innovation and unchecked exploitation. The question now is not whether others will follow, but who and how.

Author: Paul Melcher

Paul Melcher is a highly influential and visionary leader in visual tech, with 20+ years of experience in licensing, tech innovation, and entrepreneurship. He is the Managing Director of MelcherSystem and has held executive roles at Corbis, Gamma Press, Stipple, and more. Melcher received a Digital Media Licensing Association Award and has been named among the “100 most influential individuals in American photography.”